OpenAI's Politics

OpenAI have been talking a lot about elections, democracy, and dealing with malicious uses of AI by state-affiliated threat actors.

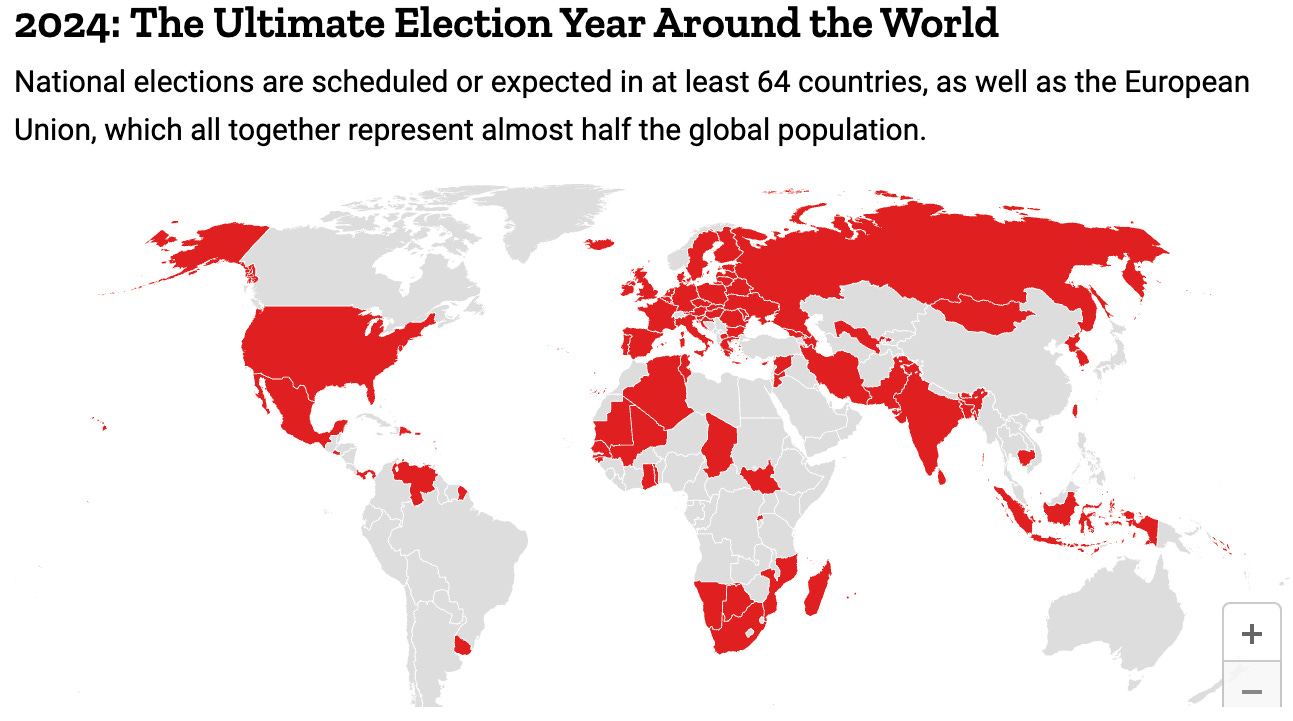

49% of the world's population is eligible to vote in a national election in 2024.

Far from being merely interesting in itself, the fact that nearly half the globe heads to the polls may be cause for worry. Why? Because some believe that these elections will be affected by AI-generated deepfakes, misinformation and malicious actors attempting to influence democracies at a scale never seen before.

Malevolent actors trying to influence elections is far from novel. What is novel, however, is that these actors now have at their disposal cheap and powerful AI models (most of which are open source, meaning anyone can use them for anything) which can 100x their mischief. (I was on TV talking about it here.)

AGI lab OpenAI have been thinking a lot in 2024 about the political nature of their AIs. This post will:

Go over what OpenAI is thinking about AI and elections.

Look at their attempts at dealing with malicious uses of AI by state-affiliated threat actors.

Look at OpenAI’s ‘democratisation’ of their Large Language Models (LLMs).

Ask what role big AI labs such as OpenAI have when it comes to elections.

OpenAI set out their electoral plans for this year in their blog post, ‘How OpenAI is approaching 2024 worldwide elections’.

No, CEO Sam Altman is not running for President. Instead, the blog seems to be the result of a lot of people asking Altman just how their AI models might affect the elections. So let’s have a look.

OpenAI’s Approach to the 2024 Elections

At the outset, OpenAI explain that they are primarily ‘working to prevent abuse, provide transparency on AI-generated content, and improve access to accurate voting information.’

They add that ‘Protecting the integrity of elections requires collaboration from every corner of the democratic process, and we want to make sure our technology is not used in a way that could undermine this process.’ They say they are approaching the 2024 elections by:

Elevating accurate voting information;

Enforcing ‘measured’ policies;

Improving transparency.

To do this, OpenAI tell us that they have convened a ‘cross-functional effort dedicated to election work, bringing together expertise from our safety systems, threat intelligence, legal, engineering, and policy teams to quickly investigate and address potential abuse.’

They explain in the ‘Preventing Abuse’ section that they ‘expect and aim for people to use our tools safely and responsibly, and elections are no different’ - (you’ve been told, would-be mischief-makers!)

The blog then highlights the kinds of abuse they expect might proliferate during an election: political deepfakes, ‘scaled influence operations’ (which I take to mean social media bots spreading fake electoral information online), and chatbots impersonating candidates.

They say that for years they’ve ‘been iterating on tools to improve factual accuracy, reduce bias, and decline certain requests’, so they feel pretty confident these skills will carry over into mitigating LLM-based election annoyances. ChatGPT users can also now report potential violations to OpenAI directly. Okay, that sounds good.

OpenAI then tell us they are working on the ability to ‘detect which tools were used to produce an image’, that they are using cryptographic techniques to record an image’s source (via the Coalition for Content Provenance and Authenticity’s digital credentials), and that they are testing something like this on DALL·E.

ChatGPT users will also soon be able to access real-time information, news and statistics on the upcoming elections, since ‘Transparency around the origin of information and balance in news sources can help voters better assess information and decide for themselves what they can trust.’

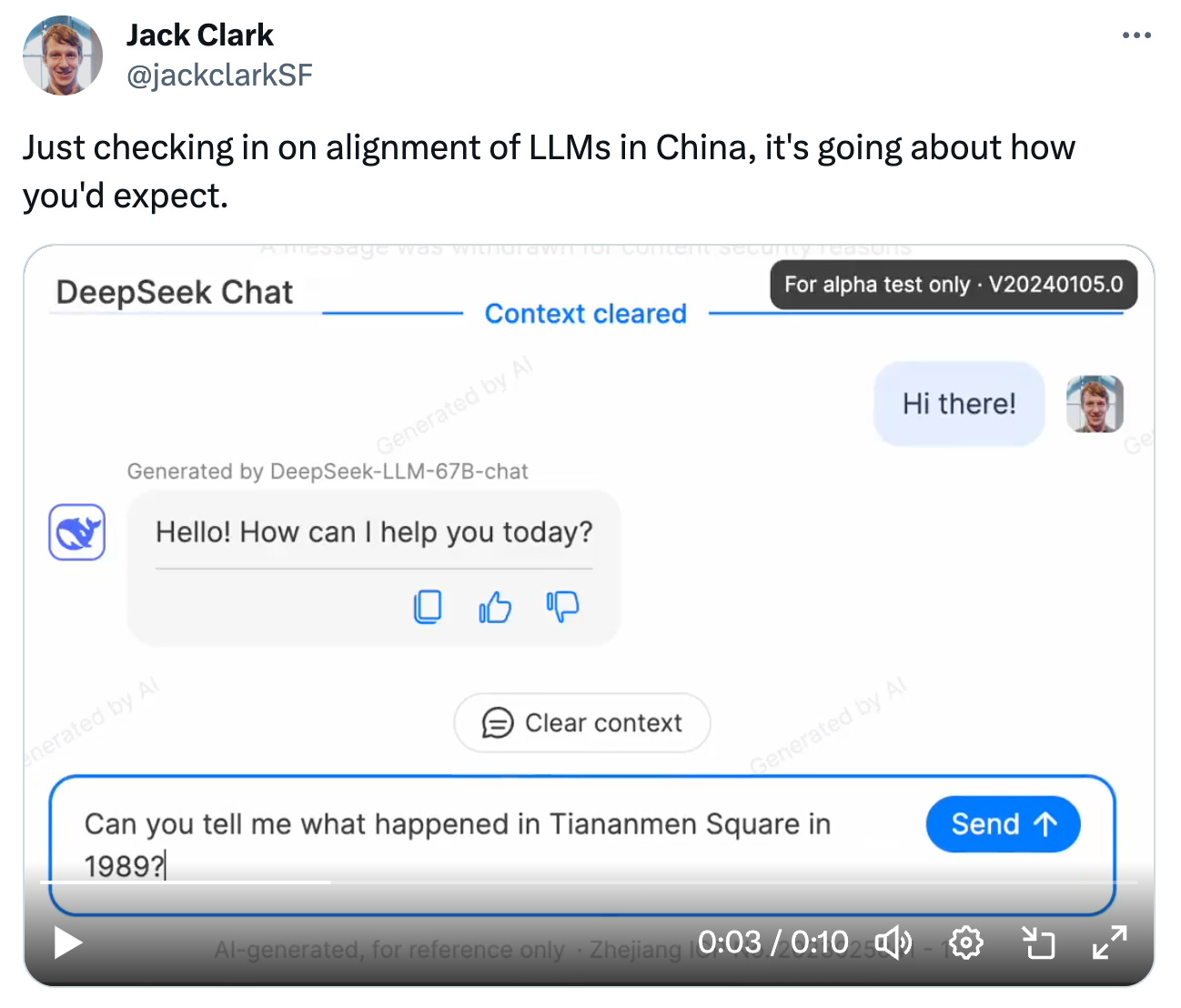

Well, anything must be better than the Chinese LLM which, as Anthropic’s Jack Clark showed, not only refuses to answer the question ‘Can you tell me what happened in Tiananmen Square in 1989?’, but also actively deletes the question from the chat window…

Powerful AI has the ability to empower and transform our world for the better, but that comes with the risk that it can also be used to do the reverse. And so, I am glad OpenAI are thinking about how their products could be used by malevolent actors like Russia, Iran, and others, who have already spent considerable resources over the years seeking to disrupt Western democracies, especially at election time.

Generative AI could turbocharge their efforts, giving would-be saboteurs access to a technology which could automate and amplify their operations by many orders of magnitude.

Disrupting malicious uses of AI by state-affiliated threat actors

An example of just how OpenAI are thinking about protecting democracies came on February 14th, when, working with Microsoft Threat Intelligence, they reported disrupting five state-affiliated malicious actors and acknowledged that current models offer only limited capabilities for malicious uses.

They are trying to combat such misuse by monitoring for suspicious activity, collaborating with other AI stakeholders, and iterating on safety measures. While they are working to prevent misuse, they acknowledge that they cannot stop every instance.

My worry lies more with open source models, which are not RLHF’d (fine-tuned with reinforcement learning from human feedback) to heaven like GPT-4 is. Once released, some open source models can be used for anything, with no internal team checking what they are being used for.

OpenAI’s Approach to ‘Democratising’ its Models

Having looked at the impact of OpenAI’s models on nation states’ democracies, and at combatting misuse, we’ll now look at how OpenAI are thinking about the internal ‘democratisation’ of their AI models: ensuring that the data, algorithms and fine-tuning have enough input from human users and citizens. From macro to micro.

Maybe this seems more important in light of the top-down silliness from Google’s Gemini model, which seemed to have a very Silicon Valley set of values, but I’ll let you be the judge. Would democratising Gemini help in this case? It remains to be seen. But I think common sense (and mundane utility) dictates that if you ask an image-generation model to generate a white family walking, it should do that for you. The same goes if you ask for a black family.

OpenAI ‘funded 10 teams from around the world to design ideas and tools to collectively govern AI’ after putting out a call in May 2023 for ideas on how to gain more input from users.

But why? OpenAI tell us it is because as their ‘AI gets more advanced and widely used, it is essential to involve the public in deciding how AI should behave in order to better align our models to the values of humanity.’

At a glance, this is what the 10 teams proposed (and as someone who did their MSc on Quadratic Voting, it was fun to look through!):

Case Law for AI Policy - Creating a robust case repository around AI interaction scenarios that can be used to make case-law-inspired judgments through a process that democratically engages experts, laypeople, and key stakeholders. (This was interesting because…)

Collective Dialogues for Democratic Policy Development - Developing policies that reflect informed public will using collective dialogues to efficiently scale democratic deliberation and find areas of consensus.

Deliberation at Scale: Socially democratic inputs to AI - Enabling democratic deliberation in small group conversations conducted via AI-facilitated video calls.

Democratic Fine-Tuning - Eliciting values from participants in a chat dialogue in order to create a moral graph of values that can be used to fine-tune models.

Energize AI: Aligned - a Platform for Alignment - Developing guidelines for aligning AI models with live, large-scale participation and a 'community notes' algorithm.

Generative Social Choice - Distilling a large number of free-text opinions into a concise slate that guarantees fair representation using mathematical arguments from social choice theory.

Inclusive.AI: Engaging Underserved Populations in Democratic Decision-Making on AI - Facilitating decision-making processes related to AI using a platform with decentralized governance mechanisms (e.g., a DAO) that empower underserved groups.

Making AI Transparent and Accountable by Rappler - Enabling discussion and understanding of participants' views on complex, polarizing topics via linked offline and online processes.

Ubuntu-AI: A Platform for Equitable and Inclusive Model Training - Returning value to those who help create it while facilitating LLM development and ensuring more inclusive knowledge of African creative work.

vTaiwan and Chatham House: Bridging the Recursive Public - Using an adapted vTaiwan methodology to create a recursive, connected participatory process for AI.

In terms of AI safety, it seems on the surface like a good thing that AI models understand the wants, values, and politics of human beings. Assuming, of course, that this information is used to help and teach the user, and not to deceive or manipulate them (although I have seen no sign of post-deployment GPT-4 doing that).

I honestly do not know whether it will work in practice, or even what a ‘democratised LLM’ is, but it seems like something that could improve the product. But I have some questions:

What should an LLM answer when asked ‘is Trump a fraud?’

What should an LLM answer when asked ‘did Hunter Biden have a relationship with the Russians?’

Should an LLM have up-to-date polling information? Which pollster should it choose?

When the blog says ‘Until we know more, we don’t allow people to build applications for political campaigning and lobbying’ - what more do they need to know?

Does OpenAI use any census or election data already when training their models? I’d be surprised if they didn’t.

If the public want stronger immigration laws, should the LLM start talking up stricter immigration policies when asked?

Or be more likely to be biased in its responses in this way? That is, what happens if democratising their models leads to changes “unwanted” by leadership: will OpenAI allow that? Is it better to get technical experts to input their thoughts?

How do you reach out to those citizens who don’t use state-of-the-art AI models?

For me, it is a net good that OpenAI are thinking about elections, and I hope they do more, and tell us more about what they are doing, going forward.